SEO is constantly evolving, all for the sake of a better Internet for its users. The path can get bumpy at times, because Google keeps updating its search algorithm and raising the bar, for example on what counts as high-quality content.

This time, something rare and dramatic happened, and the world of SEO is in an uproar. What did Google do, and how has it changed online search? Read on; you don’t want to miss this one.

1. What did Google do?

Long story short, Google has implemented new measures to prevent scraping of the SERPs (search engine result pages) for information – such as ranking positions.

Now the truth is, automated rank checking has been against Google’s guidelines for a long time. Here’s a direct quote from their spam policies:

Machine-generated traffic (also called automated traffic) refers to the practice of sending automated queries to Google. This includes scraping results for rank-checking purposes or other types of automated access to Google Search conducted without express permission. Machine-generated traffic consumes resources and interferes with our ability to best serve users. Such activities violate our spam policies and the Google Terms of Service.

And yet SERP scraping has been widely used to collect data. So why did the situation suddenly change?

For one, blocking SERP scrapers is a difficult, resource-heavy task. Until now, scrapers had ways to avoid being blocked, for example by changing their IP addresses. No doubt the rise of AI tools has made scraping even easier, which was very likely to prompt Google to act sooner or later.

So it appears Google has had a breakthrough and is on the offensive. Now the SEO tool providers are forced to adapt to the new reality.

2. What problems did it cause?

It goes without saying that website rankings are the most vital information in SEO. That’s the entire point of SEO: to increase your site rankings and appear in the top search results.

With Google’s new anti-scraping measures in place, it has become much harder for SEO tools to obtain the necessary data. Namely:

- Keyword rankings;

- Web traffic estimates;

- Competitive analysis.

While it’s still possible to obtain all this data, the process has slowed down drastically. It’s easy to see what sort of problems it’s causing:

- Outdated SEO information. By the time SEO tools finally gather the data, site rankings and traffic may have already changed.

- Disruption of the users’ workflow. It’s risky to run a digital marketing campaign without up-to-date information about its performance.

- Potential cost increase. SEO tool providers have to find workarounds for this problem, which in the end may make the tools more expensive for their users.

Curiously, not all SEO tools have been affected. Even among the biggest names in the industry, some were hit while others kept working as if nothing had happened. This suggests Google might still be refining its anti-scraping mechanisms, and similar problems may arise again.

3. The new reality of search

As a potential solution, some users have suggested that Google release a paid API for accessing its SERP data. Google has yet to comment on this idea, but it may be prudent to budget for the possibility.

In the meantime, SEO tool providers are actively developing their own solutions that will allow them to keep gathering SEO data in real time. Many of them have already found a way out of the crisis and are fully operational again.

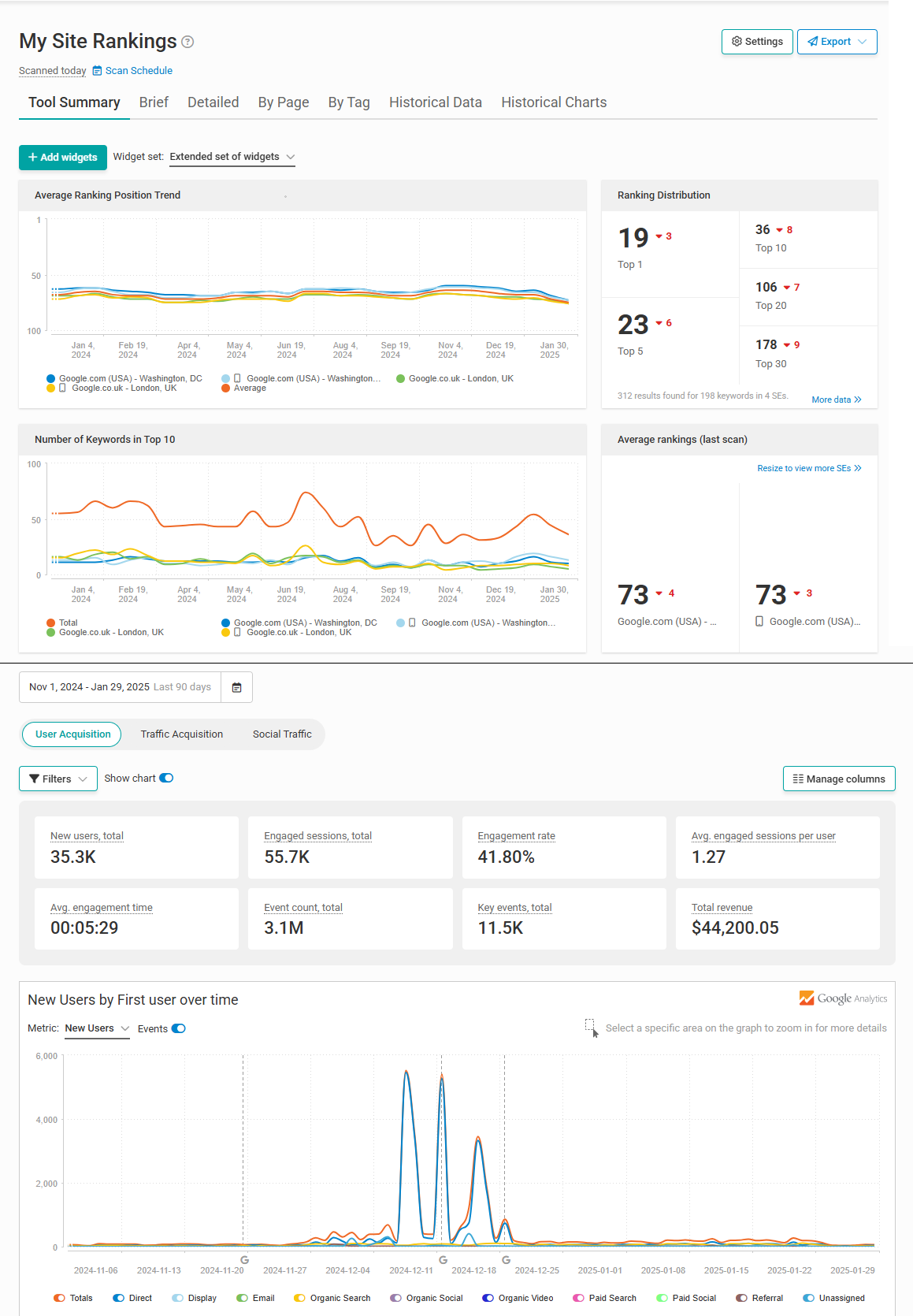

And we are proud to announce that WebCEO is one of the winners! We adjusted our rank tracking method accordingly, so we keep providing real-time rankings, as we have been doing for years, with no loss in quality. Our Rank Tracking and Traffic Analysis tools are displaying the most up-to-date information. Feel free to check.

Just don’t get too excited and run multiple scans every day. Even before Google cracked down on automatic SERP scanning, rankings data was never that quick to update. It took at least 24 hours to refresh, and normally a few days.
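If you schedule scans yourself, the 24-hour rule of thumb is easy to turn into a simple guard. The snippet below is only a minimal sketch: the `should_rescan` function, the `MIN_REFRESH` constant and the sample dates are hypothetical names and values made up for illustration. They are not part of WebCEO or any other SEO tool's API.

```python
from datetime import datetime, timedelta

# Assumption: rankings data needs at least 24 hours to refresh,
# which is the minimum mentioned in this article.
MIN_REFRESH = timedelta(hours=24)


def should_rescan(last_scan: datetime, now: datetime,
                  min_interval: timedelta = MIN_REFRESH) -> bool:
    """Return True only if at least min_interval has passed since last_scan."""
    return now - last_scan >= min_interval


# Hypothetical timestamps, chosen arbitrarily for the example.
last_scan = datetime(2025, 1, 20, 9, 0)

print(should_rescan(last_scan, datetime(2025, 1, 20, 15, 0)))  # 6 hours later: False
print(should_rescan(last_scan, datetime(2025, 1, 21, 9, 0)))   # 24 hours later: True
```

Since a refresh normally takes a few days, raising `min_interval` to something like `timedelta(days=2)` may be closer to what you actually need.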